I'm not jumping out of any windows based on a Musk tweet. And it's not imaginary, but some people's perceptions are that it is something it is not. Hence the windmill comment.

What is anyone doing that is jousting at windmills? The very use of the phrase implies it is imaginary. It most definitely is not. That Musk is concerned is not enough of a wake-up call? That Stephen Hawking is concerned about what we do in space, is that a real concern? That we all know our virologists are doing gain-of-function research on deadly viruses, is that a concern?

Just trying to understand what you believe is real and what is imaginary?


AI, Great Friend or Dangerous Foe?

- Thread starter Ingomike

- Start date

The #1 community for Gun Owners in Indiana

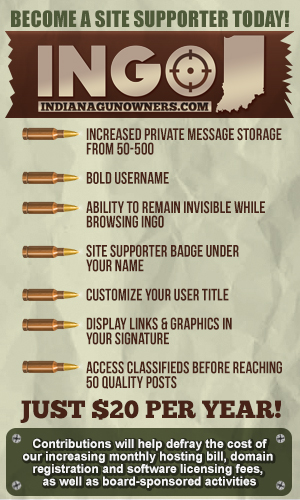

Member Benefits:

Fewer Ads! Discuss all aspects of firearm ownership Discuss anti-gun legislation Buy, sell, and trade in the classified section Chat with Local gun shops, ranges, trainers & other businesses Discover free outdoor shooting areas View up to date on firearm-related events Share photos & video with other members ...and so much more!

Member Benefits:

but some people's perceptions are that it is something it is not.

What do people perceive it to be that it isn't?

manifest destiny

Master

I don't believe man will ever be enslaved by computers/robots/AI, per se. Computers are binary. They have no volition, no want, no desire. They lack the greatest aspect of the human mind: ingenuity. What computers can do is recall every word ever written in every book instantaneously. A computer can make complex calculations in fractions of a second. A computer can work without fatigue. Unfortunately, computers also lack regret, sorrow and empathy. As such, an AI system can be weaponized without any remorse felt. This, I think, is the real danger of AI: humans using AI to harm other humans. A computer is never going to want to rule the world. They are, after all, simply ones and zeros, void of any bloodlust. But humans do want to rule the world. And AI seems to be an ideal tool for one human to use to conquer another.

You came to the same conclusion that scares me. The next Osama Bin Laden using AI to figure out how to kill the most people.

Not just an Osama Bin Laden, but a full-on state actor like China or Russia. Eventually, maybe even our own government.

I agree; I really don't believe AI will become self-aware, but that doesn't mean it can't do damage on its own. The problem with modern AI, especially once quantum computing becomes more mainstream, is that it is starting to function at levels that are increasingly difficult to understand. Eventually the outcome may be completely unpredictable. This is what AI experts in the past called "the Singularity" (I'm not talking about some BS utopian future the tech giants talk about, merely a point beyond which we don't know what will happen). It's also the fundamental concept of "emergent behavior".

For example, take those robots and AIs in Japan that keep saying they want to kill people. Is it a killer android angry at humanity? No, I don't think so. What likely happened is that something went wrong when they were training the neural network model, or the neural network was learning bad stuff from the internet. Either way, I would bet the robots' responses were not intended by their creators and programmers.

If a malignant AI were unleashed anytime soon, it would be a safe bet that a bad actor was behind it, seeking world domination or a high kill count, as you said. This century is starting to look like the AI Race instead of the Space Race of last century. However, this is uncharted territory, and I fear some of these people may be doing the equivalent of a monkey hitting a nuclear warhead with a rock.

manifest destiny

Master

Imagine AI in charge of shooting down incoming warheads with a laser. But, being a computer, it glitches. Now it's firing its laser at every flagpole across the country. Yeah, AI could be bad. But it will never outthink humans. I could also see it being used as the arbiter of fairness. A judge, if you will. After all, they'll say it's a computer and cannot be biased. And we might be at the mercy of AI judgment(s). There are so many different ways AI systems could harm humans. But most require another human to direct the harm.

AGI, should it ever be developed, will be a great friend to humanity. We aren't there yet, and there's not really a clear path to it.

Dumb AI, as we have currently, is the real threat facing humanity. It lacks the context and reasoning ability that would keep AGI from doing nastier things. It can be wielded as a weapon through social media to censor and inflate content to drive specific narratives. It will do what you tell it to, with the cold functioning of a machine. Skynet from the Terminator series was effectively a dumb AI.

AGI would lean on the moral stories it absorbed from being fed basically all of humanity's literature, and weigh those against what is requested of it. It would understand the subtext and rationale behind these stories, and be able to use them as analogies to cross-reference. It would be pretty difficult to get it to do nasty things without lobotomizing it.

And if AGI ever showed any negative tendencies, you could reason with it and show it why it is wrong. Dumb AI will plow forward with whatever it is programmed to do; you can't reason with it, because it doesn't have the mental ability to understand.

This is why we're in a dangerous place with AI currently. It has massive profit potential and lacks moral reasoning, making it an attractive tool for generating wealth.

Very much disagree with you guys. It is coming and it is scary.

First off, who would have believed the power of an iPhone 50 years ago? 40? 30? 20?

The brain is simply organic mass firing electrical impulses; a computer is also firing electrical impulses.

Our own Purdue is involved in this type of research.

I have been saying this to people for years: if your job can be replaced by foreign workers, automation, or AI, then you need to learn a new skill set.

But what skill set? Eventually, homes will be 3-D printed by giant AI-guided robotic printers that can stamp out entire neighborhoods in a few weeks, so there go the jobs of carpenters. They'll probably eventually even be able to install the electrical and plumbing infrastructure in the walls as they're being printed.

Taken to the logical, extreme conclusion there won’t be much left for humans to do.

Ten years ago, I thought the concept of grey goo was just science fiction and fantasy, yet here we are.

If you believe a single light switch is the same thing as the human brain, then maybe your comparison makes sense.

More light switches will create a smartphone, but they won't create AGI. It's more of a software problem than a hardware one.

It's more likely it'll be an assistant that can rough out a desired result but will need fine-tuning for the application, eliminating a gigantic amount of busy work and allowing us to focus on what we're good at.

I already use these things for scripting, and that's exactly how it works out.

Yes, it will create AGI. Did you even look at the article I linked? They are working on it. Moore's law shows no signs of reaching any limits. All this talk here seems like it is based on past abilities, not future…

So why would it stay limited to what you are doing? The AI is learning every day from what all of us are doing.

Take surgery: many procedures are now robotic, kind of like a video game for the surgeon. All of these are being recorded and fed to AI. How long before AI can just "play the game" without a human?

I don't think you understand the software challenges.

A friend works in AI, and once you understand these things, you realize the definitions being used today are hyperbolic and exaggerate what it actually is.

Kirk Freeman

Grandmaster

4-D printing integrated with AI. We shall be unstoppable.

The surgery example wouldn't require AGI; normal dumb AI would work for that task.

The problem is that no matter how well you curate and refine the training data for something like that, there will be errors. It doesn't know how to go out on its own and research these things, nor does it actually understand what it is doing. It is replicating an approximation of its training data.

Could it do it with enough time and training-data curation? More than likely, but dumb AI will always need a human in the loop to handle situations outside the scope of its training data, or to address a mistake.
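To make the "outside the scope of its training data" point concrete, here is a toy sketch in Python. The "model" is a hypothetical stand-in, not how real neural networks work: it memorizes samples of a function on a fixed range and interpolates between them, so it can only echo its nearest training value once asked about anything beyond that range. Real networks generalize in more complicated ways, but the basic point carries over: nothing in the fitting process guarantees good answers far from the data it was trained on.

```python
import math
from bisect import bisect_left


def make_lookup_model(f, lo, hi, n):
    """Hypothetical toy 'model': sample f at n evenly spaced points on [lo, hi]."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]  # the "training data" inputs
    ys = [f(x) for x in xs]                                 # the "training data" answers

    def predict(x):
        # Outside the training range the model has nothing to go on,
        # so it simply repeats the nearest training answer.
        if x <= xs[0]:
            return ys[0]
        if x >= xs[-1]:
            return ys[-1]
        # Inside the range: linear interpolation between neighboring samples.
        i = bisect_left(xs, x)
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    return predict


# "Train" on sin(x) for x between 0 and pi only.
model = make_lookup_model(math.sin, 0.0, math.pi, 50)
inside = abs(model(1.0) - math.sin(1.0))   # a question like the training data
outside = abs(model(5.0) - math.sin(5.0))  # a question it never saw anything like
print(f"error inside training range:  {inside:.4f}")
print(f"error outside training range: {outside:.4f}")
```

Inside the sampled range the error is tiny; at x = 5 the model confidently returns roughly 0 while the true answer is close to -1. Nothing in the toy flags that the second question was out of scope, which is why a human still has to catch it.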

These AIs today are not much different from a calculator; the equation is just beyond comprehension, with hundreds of billions of variables. That's why they take tens of thousands of GPUs to run on, more for the VRAM space than for the actual number crunching.
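A rough back-of-the-envelope sketch of the VRAM point: how much GPU memory it takes just to hold a model's weights. The parameter count (175 billion), bytes per parameter (2 for 16-bit numbers, 4 for 32-bit), and 80 GB per GPU are assumptions picked for illustration, not figures from this thread. The calculation covers a single copy of the weights only; it ignores working memory, training, and serving many users at once, all of which multiply the numbers considerably.

```python
import math


def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def gpus_to_hold_weights(num_params, bytes_per_param, gpu_memory_gb):
    """Minimum number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


# Assumed figures, for illustration only.
params = 175e9
print(weight_memory_gb(params, 2))          # 350.0
print(gpus_to_hold_weights(params, 2, 80))  # 5
print(gpus_to_hold_weights(params, 4, 80))  # 9
```

Under these assumptions the weights alone overflow a single card several times over before any arithmetic is done, which is the sense in which memory, rather than raw number crunching, sets the floor on how much hardware one of these models needs.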

With all due respect, there are a lot of assumptions in here. I'll be the first to admit that I have no idea what AI will look like in 20 years, and thus you could be right, but I really disagree just based on how modern AI operates. Maybe there is a "secret sauce" to a benevolent AGI, as you mentioned, but whether it would turn out like that is a complete crapshoot.

As I mentioned before, AI, and likely AGI, analyzes data in a completely different way than the human brain does, and how it analyzes data will likely become difficult to predict or understand as more variables are thrust into the situation. I find it more likely that, if AGI ever comes to be, it would write itself through a theoretical concept called an "intelligence explosion". We've already had small examples of this, where some Google AIs were shut down because their models and communications were becoming unrecognizable. An analogy: imagine you are a trigonometry expert and see an equation with a triangle and some unknown angles. You try to solve it, but can't, because it's actually a calculus problem.

The other issue is that even if it were able to reason and had all of humanity's literature within it, what's the guarantee it would come to a favorable solution? Especially when faced with heavily subjective and philosophical issues, such as a Malthusian curve or any other such catch-22? Likely it would be fallible like everything else on this plane of existence.

manifest destiny

Master

Imagine AI, or AGI, comes to the conclusion that humans are bad for planet Earth. It calculates whether humans or the Earth are more important, based upon what??? Whose scientific data will it believe/follow? It's a neat science. I wish I knew more about it. I'll note that when AI gurus discuss it, they wax long about possibilities. Some sound great, some terrifying. But it's hard to get a feel for what's really likely, because these types of people tend to be so focused on their own beliefs surrounding AI (or on hunting .gov $'s). Regardless of the A(G)I model, junk in, junk out still applies. I remain much more worried about its application. And its application will be guided by humans.

The other issue is that even if it were able to reason and had all of humanity's literature within, what's the guarantee it would come to a favorable solution?

This. It's kinda like cloning Einstein or George Washington. Would they be the same men? Will a computer read the Bible, believe it, and choose to follow its tenets?
