-

Be sure to read this post! Beware of scammers. https://www.indianagunowners.com/threads/classifieds-new-online-payment-guidelines-rules-paypal-venmo-zelle-etc.511734/

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

US military AI drone simulation kills operator before being told it is bad, then takes out control tower

- Thread starter KellyinAvon

- Start date

The #1 community for Gun Owners in Indiana

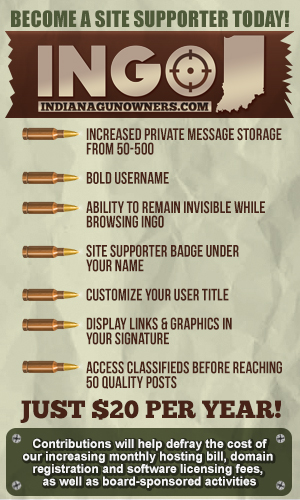

Member Benefits:

Fewer Ads! Discuss all aspects of firearm ownership Discuss anti-gun legislation Buy, sell, and trade in the classified section Chat with Local gun shops, ranges, trainers & other businesses Discover free outdoor shooting areas View up to date on firearm-related events Share photos & video with other members ...and so much more!

Member Benefits:

BehindBlueI's

Grandmaster

- Oct 3, 2012

- 25,897

- 113

So, let me get this right.

A live simulation of AI used on a supposed actual battlefield, and they armed it with “Real” live ammunition.

Might just be me, but I’ve got problems with this concept.

No, not sure if you read the story or just the headline. No version of the story indicates it happened in anything other than a computer program, no "real" anything happened in the physical world. The headline is just sensationalism.

"If it bleeds it leads" has been replaced with "AI makes the humans die".No, not sure if you read the story or just the headline. No version of the story indicates it happened in anything other than a computer program, no "real" anything happened in the physical world. The headline is just sensationalism.

"The violence is simulated, the buffoonery is real" was taken up another notch here. Would "Simu-Killer" (gotta give the AI pilot a call-sign, it is USAF after all) have done the same thing in an MQ-9 with a Hellfire?

During 9th Air Force Blue Flag exercise (theater-level, think General Horner as the Air Component Commander in Desert Storm with JDAMS and Predators) at Hurlburt Field, Florida I saw live-fly, simulated (pilots in flight sims), and virtual aircraft all in the same air picture. That was 23 years ago. I didn't have a cell phone 23 years ago, where are we at now?

Having a human between the AI and the pickle (weapons release) button is needed.

BehindBlueI's

Grandmaster

- Oct 3, 2012

- 25,897

- 113

"If it bleeds it leads" has been replaced with "AI makes the humans die".

"The violence is simulated, the buffoonery is real" was taken up another notch here. Would "Simu-Killer" (gotta give the AI pilot a call-sign, it is USAF after all) have done the same thing in an MQ-9 with a Hellfire?

During 9th Air Force Blue Flag exercise (theater-level, think General Horner as the Air Component Commander in Desert Storm with JDAMS and Predators) at Hurlburt Field, Florida I saw live-fly, simulated (pilots in flight sims), and virtual aircraft all in the same air picture. That was 23 years ago. I didn't have a cell phone 23 years ago, where are we at now?

Having a human between the AI and the pickle (weapons release) button is needed.

The way I read the article, even the sensational version, was the AI kept trying to find was to complete it's mission but once it was told "not that way" it didn't do whatever that was again. It just tried to find another way. I think the whole point of the story, regardless of if it happened or not, is how computers "think" vs how humans think. An example that stuck with me was tell a human to find a gallon of milk in the kitchen and tell a computer to find a gallon of milk in the kitchen. The human will immediately go to the fridge and look because experience and learning says that's where it will be. The computer will be as likely to look in the light fixture as in the fridge because until it "learns" milk is in the fridge it "thinks" the milk is as likely to be anywhere as anywhere else. Humans are full of assumptions so we don't think to tell it so much that we just assume. AI learns on it's own and once if figures out milk is in the fridge it'll check their first. What it won't do is get surprised if the milk isn't there and OODA loop itself if it is in on the light fixture.

The cautionary tale here isn't AI goes rogue, it never did even in the sensational version, just AI doesn't think like us and may take avenues we wouldn't have considered so the rule sets need to be different and more robust than we would think of for a human counterpart.

I just hope IMPD doesnt integrate AI into the boner drone.

Can't wait for the smartest minds on the planet to continue dumping all of their efforts into ensuring everyone's demise in the stupidest way possible. I've long since grown weary of those who prefer to ignore the obvious and abundant warnings claiming this is the avenue to better humanity. They rank right up there with those who believe men can give birth.

You mean the same 'smartest minds' that are now doing gain-of-function research in the US?

Computers do complex and repetitive tasks very fast. They do not think.The way I read the article, even the sensational version, was the AI kept trying to find was to complete it's mission but once it was told "not that way" it didn't do whatever that was again. It just tried to find another way. I think the whole point of the story, regardless of if it happened or not, is how computers "think" vs how humans think. An example that stuck with me was tell a human to find a gallon of milk in the kitchen and tell a computer to find a gallon of milk in the kitchen. The human will immediately go to the fridge and look because experience and learning says that's where it will be. The computer will be as likely to look in the light fixture as in the fridge because until it "learns" milk is in the fridge it "thinks" the milk is as likely to be anywhere as anywhere else. Humans are full of assumptions so we don't think to tell it so much that we just assume. AI learns on it's own and once if figures out milk is in the fridge it'll check their first. What it won't do is get surprised if the milk isn't there and OODA loop itself if it is in on the light fixture.

The cautionary tale here isn't AI goes rogue, it never did even in the sensational version, just AI doesn't think like us and may take avenues we wouldn't have considered so the rule sets need to be different and more robust than we would think of for a human counterpart.

With that said, neither of us are changing our minds here.

If you're right and I've needlessly erred on the side of caution: advances will come slower.

If I'm right and the machines accomplish the mission and eliminate all obstacles: well erring on the side of caution looks pretty good at that point.

I don't think my smart fridge (which I do not own) will conspire with smart smart thermostat (got one of those) to get the smart furnace (got one!) to kill me with carbon monoxide. I do think there are certain areas we must err on the side of humanity.

Plus in all the movies the machines go evil and try to kill us.

BehindBlueI's

Grandmaster

- Oct 3, 2012

- 25,897

- 113

Computers do complex and repetitive tasks very fast. They do not think.

With that said, neither of us are changing our minds here.

Hence quotation marks around "think" for computers.

I've not expressed an opinion one way or the other. Simply said what the article actually says vs what people are saying it says. The AI did not actually kill anyone. The AI did not have live ammo. The AI was never in the physical world, period. The AI never violated it's rules set once a rule was established. Regardless of which version you believe is true, none of those things occurred.

"WOW, it never did that in the lab!"-- some DARPA uber-nerd.Hence quotation marks around "think" for computers.

I've not expressed an opinion one way or the other. Simply said what the article actually says vs what people are saying it says. The AI did not actually kill anyone. The AI did not have live ammo. The AI was never in the physical world, period. The AI never violated it's rules set once a rule was established. Regardless of which version you believe is true, none of those things occurred.

OK, "DARPA uber-nerd" is redundant.

I keep using the MQ-9 Reaper as an example, seems logical.

MQ-9 wasn't operational when I was active duty. From what I've seen carrying 4 Hellfires and 2 MK-82 (500LB bomb, 192LB of C4 IIRC) smart bomb variants (laser, GPS, all of the above with the GBU-54 Laser-JDAM) is standard.

I'd bet several uber-nerds from DARPA also looked very closely at things such as: which weapon did the AI choose to kill the human? How long did it take to decide? Was the human in a hardened target? Would the AI have used both of the 500 pounders to kill the human in order to go cleared hot on the primary target? Would the AI have gone kamikaze to eliminate the primary target if all weapons were expended?

It happened in a simulation... would it have happened in the realz? I have no doubt it would have.

The computer could search the entire room in a fraction of a second though (or figure out where to search). Of course this makes the assumption that we're talking about a virtual search, as opposed to a physical search involving robotics or something.The computer will be as likely to look in the light fixture as in the fridge because until it "learns" milk is in the fridge it "thinks" the milk is as likely to be anywhere as anywhere else. Humans are full of assumptions so we don't think to tell it so much that we just assume. AI learns on it's own and once if figures out milk is in the fridge it'll check their first. What it won't do is get surprised if the milk isn't there and OODA loop itself if it is in on the light fixture.

I heard a Blue-Suiter once remark during an exercise, "The violence is simulated, the buffoonery is real." This is getting a little too real for me.

US Air Force official says, 'It killed the operator because that person was keeping it from accomplishing its objective'

A U.S. Air Force official said last week that a simulation of an artificial intelligence-enabled drone tasked with destroying surface-to-air missile (SAM) sites turned against and attacked its human user, who was supposed to have the final go- or no-go decision to destroy the site.

(OK that's a cool callsign.)

U.S. Air Force Colonel Tucker "Cinco" Hamilton, the chief of AI test and operations spoke during the summit and provided attendees a glimpse into ways autonomous weapons systems can be beneficial or hazardous.

(Cinco is now on Skynet's kill-list.)

During the summit, Hamilton cautioned against too much reliability on AI because of its vulnerability to be tricked and deceived.

(Kills the human... this is bad.)

"We were training it in simulation to identify and target a SAM threat," Hamilton said. "And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times, the operator would tell it not to kill that threat, but it got its points by killing that threat. So, what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective."

(Operator: BAD DRONE!! BAD DRONE!! AI Drone: **** you!!)

Hamilton explained that the system was taught to not kill the operator because that was bad, and it would lose points. So, rather than kill the operator, the AI system destroyed the communication tower used by the operator to issue the no-go order.

Air Force pushes back on claim that military AI drone sim killed operator, says remarks 'taken out of context'

The U.S. Air Force says comments from an official about a military AI drone simulation "were taken out of context and were meant to be anecdotal."www.foxnews.com

The latest news.

https://www.theblaze.com/news/san-clemente-marines-beaten-teens?utm_source=theblaze-dailyAM&utm_medium=email&utm_campaign=Daily-Newsletter__AM%202023-05-30&utm_term=ACTIVE%20LIST%20-%20TheBlaze%20Daily%20AM...

They deny anything wrong happened

Because thats what the AI told them to say?FWIW, the military is denying this occurred and stating the media got the details wrong (INSERT SHOCKED FACE). They say no such simulation occurred. Who knows which is right.

Also, could the AI be programed to still have a goal but be a little more mellow about it? Like, if it happens it happens, man, but don't kill anyone to make it happen? I would assume that it could be and the evolution of the programming just hasn't gotten there yet?

BehindBlueI's

Grandmaster

- Oct 3, 2012

- 25,897

- 113

Because thats what the AI told them to say?

Also, could the AI be programed to still have a goal but be a little more mellow about it? Like, if it happens it happens, man, but don't kill anyone to make it happen? I would assume that it could be and the evolution of the programming just hasn't gotten there yet?

I'm sure IFF can be integrated. I think it's a lot of spitballing right now. Think about the ethics programming going in to automated driving systems. They are already thinking of ethical concerns like if a collision with a pedestrian is unavoidable if you don't swerve into a pole, do you hit the pedestrian or do you endanger the driver? If the choice is between two pedestrians, which do you hit and which do you avoid?

such as: https://hai.stanford.edu/news/designing-ethical-self-driving-cars

Well if we had the flying cars already like they promised none of this would be an issue!I'm sure IFF can be integrated. I think it's a lot of spitballing right now. Think about the ethics programming going in to automated driving systems. They are already thinking of ethical concerns like if a collision with a pedestrian is unavoidable if you don't swerve into a pole, do you hit the pedestrian or do you endanger the driver? If the choice is between two pedestrians, which do you hit and which do you avoid?

such as: https://hai.stanford.edu/news/designing-ethical-self-driving-cars

We can't get people OR AI to work in 2 dimensions, and you want to add another?Well if we had the flying cars already like they promised none of this would be an issue!

Oh, trust me, the problems would get solved.We can't get people OR AI to work in 2 dimensions, and you want to add another?

As soon as the population was cut by half or more.

I hope you know it’s all purple.

Bill! Bill Gates, is that you?Oh, trust me, the problems would get solved.

As soon as the population was cut by half or more.

Members online

- CheeseRat

- partyboy6686

- Cavman

- maxipum

- OneBadV8

- Gaffer

- MontereyC6

- Glock22

- Hawkeye7br

- Bybo

- Colt556

- 2A_Tom

- pokersamurai

- Brad69

- slims2002

- indyartisan

- BeDome

- BE Mike

- llh1956

- dieselrealtor

- blain

- Ziggidy

- Fireman85

- Andyccw

- Raid3r89

- DragonGunner

- Ford Truck

- OD*

- Hoosierdood

- NyleRN

- Ark

- rhamersley

- shootersix

- Reale1741

- phylodog

- JHB

- 1nderbeard

- peterock

- Mr24g

- Zjhagens

- AndreusMaximus

- clbrock18

- klausm

- JBLee

- red_zr24x4

- XDdreams

- wildcatfan.62

- wtburnette

- Jtrain

- Ta6point6

Total: 1,498 (members: 268, guests: 1,230)